Adobe Max 2022 emphasized 3D design and mixed reality headsets, but the AI-generated elephant in the room was the rise of text-to-image generators like Dall-E. How does Adobe plan to respond to these revolutionary tools? Slowly and carefully, according to the opening keynote, but an important feature hidden in the new version of Photoshop shows that the process has already begun.

Near the end of the release notes for the latest version of Photoshop, v24.0, there is a beta feature called "Background Neural Filter". What does it do? Like Dall-E and Midjourney, it allows you to "create a unique background based on the description". All you have to do is type in a description of the background, select "Create" and choose the result you prefer.

However, it is nowhere near being Adobe's rival to Dall-E. It's only available in Photoshop Beta, a separate testbed from the main app, and is currently limited to typing in colors to produce different photo backgrounds, rather than weird concoctions from the darkest corners of your imagination.

But the "Background Neural Filter" is clear evidence that Adobe is, albeit cautiously, moving further into AI image generation. And its keynote at Adobe Max shows that it believes this frictionless method of creating images is undoubtedly the future of Photoshop and Lightroom, once the small matter of copyright and ethical standards has been resolved.

Creative co-pilots

Adobe didn't directly mention the "Background Neural Filter" at Adobe Max 2022, but it did explain where the technology will eventually end up.

David Wadhwani, president of Adobe's Digital Media business, said the company has the same technology as Dall-E, Stable Diffusion and Midjourney; it has simply chosen not to implement it in its applications yet. "Over the past few years, we have invested more and more in Adobe Sensei, our artificial intelligence engine. I like to call Sensei your creative co-pilot," Wadhwani said.

"We are working on new features that can take our main flagship apps to whole new levels. Imagine being able to ask your creative co-pilot in Photoshop to add an object to the scene by simply describing what you want, or asking your co-pilot to give you an alternative idea based on what you have already built. It's like magic," he added. It certainly goes beyond Photoshop's Sky Replacement tool.

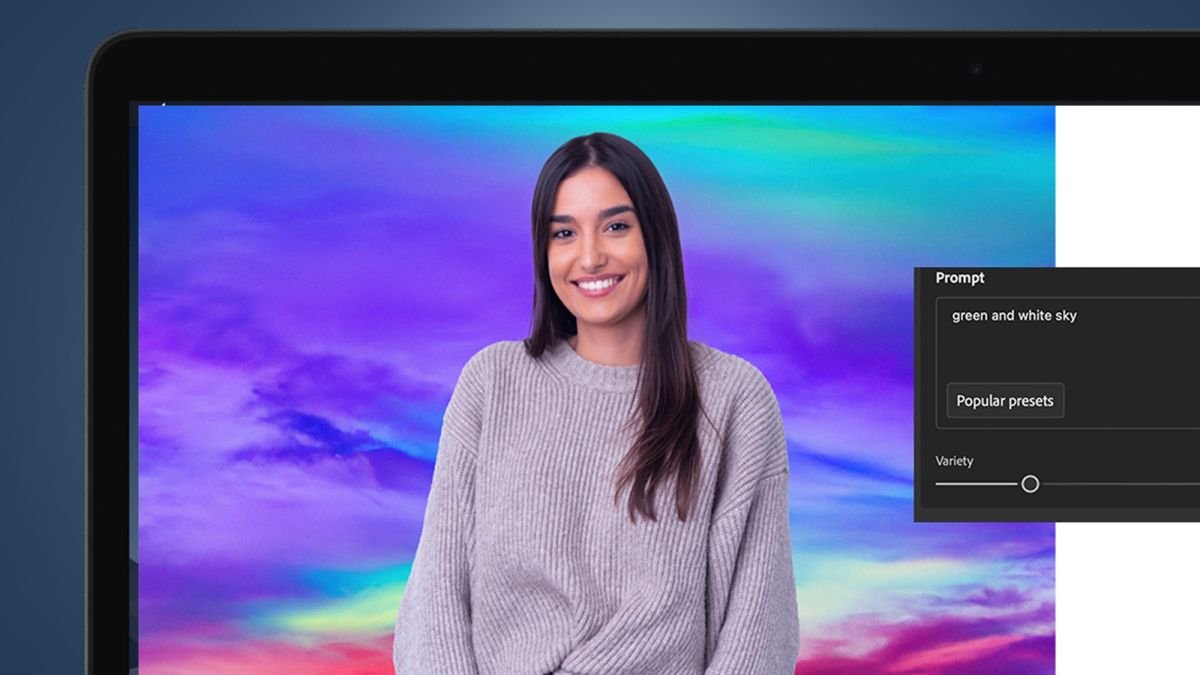

He said this while standing in front of a mocked-up version of what Photoshop would look like with Dall-E's powers (above). The message was clear: Adobe could offer text-to-image generation at this scale right now, but it has chosen not to.

But it was Wadhwani's Lightroom example that showed how this kind of technology could be more sensibly integrated into Adobe's creative applications.

"Imagine if you could combine 'gen-tech' with Lightroom. So you could ask Sensei to turn night into day, a sunny photo into a beautiful sunset. Move the shadows or change the weather. All of this is possible today with the latest advances in generative technology," he explained, with a slight nod to Adobe's new rivals.

So why hold back while others steal its AI-generated lunch? The official reason, and one that certainly has some merit, is that Adobe has a responsibility to ensure that this new power is not exercised recklessly.

"For those of you unfamiliar with generative AI, you can create an image just from a text description. And we're really excited about what it can do for all of you, but we also want to do it in a thoughtful way," Wadhwani explained. "We want to do this in a way that protects, empowers and advocates for the needs of creators."

What does this mean in practice? While the details are still a bit vague, Adobe will move more slowly and cautiously than the likes of Dall-E. "This is our commitment to you," Wadhwani told the Adobe Max audience. "We approach generative technology from a creator-centric perspective. We believe that AI should enhance human creativity, not replace it, and that it should benefit creators, not replace them."

This partly explains why Adobe has, so far, only gone as far as Photoshop's "Background Neural Filter". But that is only part of the story.

The long game

Despite being the historic giant of creative apps, Adobe is undoubtedly still very innovative: just take a look at some of the projects at Adobe Labs, especially those that can transform objects in the real world into 3D digital assets.

But Adobe is also susceptible to being caught off guard by fast-moving rivals. The likes of Photoshop and Lightroom were designed as desktop tools, which meant Canva stole a march on it with easy-to-use, cloud-based design tools. That's why Adobe agreed to pay $20 billion for Figma last month, more than Facebook paid for WhatsApp in 2014.

Is the same thing happening with Dall-E and Midjourney? Probably, given that Microsoft has just announced that Dall-E 2 will be integrated into its new graphic design app, Designer, part of its 365 productivity suite. AI image generators are reaching the mainstream, despite Adobe's doubts about the speed at which this is happening.

And yet Adobe is also right about the ethical issues surrounding this exciting new technology. There's a massive copyright cloud growing with the rise of AI image generation, and it is understandable that one of the founders of the Content Authenticity Initiative (CAI), which is designed to fight deepfakes and other manipulated content, is reluctant to go all in on generative AI.

Still, Adobe Max 2022 and the arrival of the "Background Neural Filter" show that AI image generation will no doubt be a big part of Photoshop, Lightroom, and photo editing in general; it may just take a little longer for it to appear in your favourite Adobe application.